Are the high schools all good?

Thinking about Nex Benedict, surveillance to spot vaping or mental health issues, and going back to speak at mine.

I did the cutest thing yesterday - I went and spoke to the media class at my old high school. The last time I did something like this at a high school, I was absolutely eaten alive by a room of girls who honestly could not have given less of a shit about me being there, but this group were so fun and interested, and honestly had hot hot takes about the world (and how we could make the 6pm news better).

Some highlights:

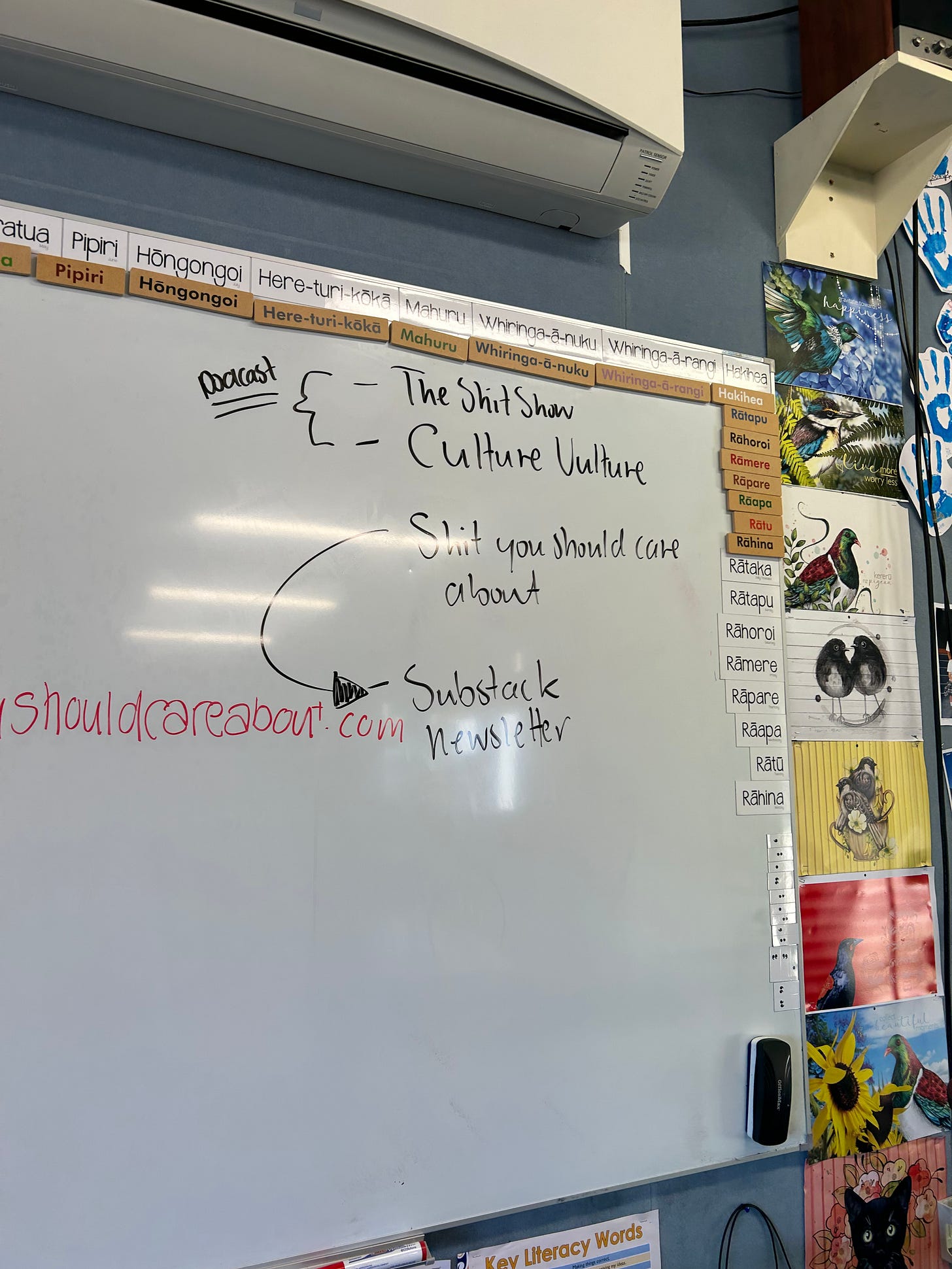

Having to self-promo old school style because our website is blocked on the school wifi:

And a bunch of them are making podcasts for their assessments this year, so I gave them some tips I learnt from years of recording on my bedroom floor:

Finished off the night by making friendship bracelets to take to TAYLOR SWIFT IN SYDNEY (have I even mentioned that I’m going to this in the newsy??) (Close Friends knew….)

Comment below if you can guess any of the acronyms 𓆩♡𓆪

⊹˚. ♡.𖥔 ݁ ˖ IN THE NEWS⊹˚. ♡.𖥔 ݁

Thinking about Nex Benedict

Content warning: bullying, transphobia, death.

Over the past few days, I’ve been reading about Nex Benedict, a gender-fluid transgender high school student who was attacked by bullies in an Oklahoma school bathroom and has since lost their life.

To learn more, I turned to the person I always turn to for trans and queer news: Erin, who handles stories like this both delicately and with no bullshit. Here’s the account Erin gave:

Though details about the specific incident remain sparse, we learned that Nex had been repeatedly bullied at school for being transgender and that the bullying erupted into violence toward them. In what has been described as a "physical altercation," Nex suffered a severe head injury in a high school bathroom at the hands of three girls. Allegedly, no ambulance was called, though Nex was taken to the hospital by their mother and was discharged. They succumbed to their head injury the next morning.

A reflection of anti-trans panic

Nex’s story is fucking heartbreaking and highlights something bigger than another life cut too short. As Erin points out, Nex’s death comes only a month after the god-awful Chaya Raichik (of Libs of TikTok) was appointed to the Library Media Advisory Committee as part of a plan to “make schools safer” in Oklahoma. But safer for who? Maybe I’m missing something, but safety here seems to be coming at the expense of kids who are already among the most vulnerable.

Meanwhile, state schools superintendent Ryan Walters has put out a horrific video calling transgender youth in bathrooms “an assault on truth” and dangerous to other kids. He has been a fierce opponent of LGBTQ+ people in schools, even going as far as demanding that a principal be fired for being a drag queen in his time off. He has also prevented students from changing their gender markers in school records, saying that he “did not want [transgender people] thrust on our kids.”

The hope is that Nex’s story will bring about change, or at the very least encourage these lawmakers (or influencers, in Libs of TikTok’s case) to open their eyes to the repercussions of their hateful agenda. But in a world where people are literally calling for the eradication of trans people, that hope feels further and further away.

Rest in peace, Nex 𓆩♡𓆪

What’s up with all the school surveillance?

Content warning: suicide

Yesterday I read two pieces that blew my mind (lol maybe I’m naive) about how schools are using AI to detect mental health crises, and sensors to detect vaping. Let me elaborate.

AI to spot self-harm

The first piece I read was in TIME, titled “Schools Let AI Spy on Kids Who May Be Considering Suicide. But at What Cost?” It opens by noting that suicide is the second leading cause of death among American youth between the ages of 10 and 14, and that a nationwide shortage of mental health professionals is only making things worse. So one way schools are trying to tackle the problem is by introducing AI technology to track risk:

As a remedy, school administrators, faced with daunting funding and staffing shortages, have increasingly looked to technology to help them manage the youth suicide crisis. Specifically, companies such as Bark, Gaggle, GoGuardian and Securly have developed technology in the form of AI-based student monitoring software that tracks students’ computer use to identify students facing mental health challenges. It is generally designed to operate in the background of students’ school-issued computing devices and accounts, and flag activity that may indicate that they are at risk for self-harm.

Surveillance to detect vaping

The next piece was in Axios, titled “Schools use surveillance tech to punish vaping.” This one’s a little more straightforward (perhaps even a little more understandable), but essentially, some schools in the States (and in New Zealand too, actually) have set up sensors and surveillance cameras to detect vaping - often without informing students.

How it works: When those sensors detect vaping, they activate surveillance cameras outside the bathroom in one district, per AP.

Another company's technology detects an increase in noise in school bathrooms and sends a text alert to school officials. It doesn't record audio.

Rather than give you my hot takes and risk sounding incredibly out of touch, I want to know - what do you think about all this surveillance at school? Fair enough or a bit rough?

☆⋆。𖦹°‧★ ON THE WEB ☆⋆。𖦹°‧★

A Woman’s 50-Part TikTok Series About Her Marriage Is the Internet’s Latest Obsession (TIME)

Billie Eilish Has Thoughts On TikTok Stars — And So Do We (Fashion Mag)

▶︎ •၊၊||၊|။|||| CULTURE VULTURE ▶︎ •၊၊||၊|။||||

Resharing our two-part Taylor Swift series because it feels relevant rn:

☆Other good shit☆

Pre-order our book Make It Make Sense here!

Join our Book Club here!

Become a Close Friend on Instagram here!

Drop a question for our weekend advice column ‘Wait, But What?’ here!

Support the independent media you love by becoming a paid supporter here!

PS, if you wanna chat to me about anything I’ve written today, your latest crush, or if you just want a pen pal, reply to this email and we can be friends 𓆩♡𓆪

I was a social work intern at a school that used Gaggle to flag instances of suicide/self-harm (SH) language in school emails and G-chats. When a conversation was flagged, a real person reviewed it and referred the student to us for a risk assessment. It actually added to our workload rather than reducing it, because we had to follow up with every flagged student, even if it was clear they were joking or using the words in another context. That meant other kids who needed services might not get to meet with us right away, because we had to meet with the flagged students to avoid liability in case they actually did harm themselves.

I really feel uncomfortable with this as a student. I love the potential of being able to help students without them having to speak up first (considering many students don’t know how to talk about their mental health needs), but honestly, how can a computer program know what we need any better than we do? And what if it starts assigning medication to kids who don’t know they don’t need it? I just don’t like it.