Today I have something VERY COOL and VERY special for you - a newsy written by one of YOU! A couple of weeks (or maybe months??) back I (boldly) jumped on the mic with Dunc and said that I wasn’t that worried about AI taking my job (because I didn’t think it could connect with you all in the way that I can!). Zoe - who’s much more knowledgeable than me in this field - heard this, and took it as a challenge.

A little bit about Zoe:

Zoe is a 22-year-old Software Engineer currently living in New York City with her dog Olive. She recently graduated from USC with a Bachelor's degree in Computer Science and since then has worked at Meta (where she contributed to the development of PlayTorch, open-source software that aims to democratize the use of AI) and is now prepping for a role at IndieBloom, a software company focused on helping neurodivergent people accomplish their daily goals with less stress (love that!!!). Zoe loves learning the technical foundations and ethical implications of AI by reading about how models are trained and applying this knowledge to areas of personal intrigue. In the future, Zoe hopes to attend graduate school and pursue a career in academic research.

Could AI write the SYSCA daily newsy? Let’s find out!

When DALL-E 2 (OpenAI’s state-of-the-art AI System that generates realistic images and art from natural language descriptions) was released, my initial reaction was one of pure awe and excitement. As a computer scientist, I was deeply fascinated by the technical mechanics of generative AI and saw its potential to help me express my creativity despite my lack of artistic talent. Liv and Luce’s critique of DALL-E 2 on Culture Vulture changed my perspective.

In case you missed it, Liv and Luce critiqued the impact generative AI will have on human artists, the implications of collecting training data without providing compensation or credit to the original artists, and the lack of innovation in a system that only replicates techniques society has already deemed to be “good art.” So, I was surprised a few months later when I was listening to an episode of The Shit Show and Luce said, “Weirdly I was more worried at the beginning about DALL-E 2… than I was about ChatGPT being able to write the newsletter…Maybe this is a narcissistic thing because… no AI can connect with my audience the way I can or crack the jokes that I can.”

There are a ton of issues with using AI to generate news stories, but I don’t think that GPT’s lack of potential to learn personality and connect with an audience is one of them. As summarized by Dr. Casey Fiesler (@professorcasey), GPT can be thought of as a “stochastic parrot” because instead of generating text based on communicative intent, its output is solely based on a set of statistical patterns in its training data.

SYSCA’s daily newsy is the perfect candidate for replacement by AI, since selecting the most relevant existing news stories and summarizing them in a specific style and tone is exactly what a “stochastic parrot” can be trained to do. I AM BY NO MEANS CALLING LUCE A STOCHASTIC PARROT (probably not a sentence you ever thought you’d see in the newsy, am I right?). I am incredibly grateful for her ability to present important news stories in a fun, accessible, and evidence-based format because it has helped me stay informed about current events without becoming overly consumed by existential dread. The time, personality, and critical thinking that she pours into each edition of her newsletter is the only reason that a stochastic parrot can learn from her work.

In response to Luce’s declaration that no AI could mimic her personality driven content, Dunc said “In the short to medium term, the relationship that people have with you Lucy, and the kind of style and scope, it’s kind of unimaginable… that it could be replicated.” I took this as a challenge.

GPT is fine-tuned using datasets composed of prompt/completion pairs. It uses a mechanism known as “attention” to weigh the context of the prompt and predict the most likely next token. When you fine-tune the model, you update its weights, which shifts the probabilities it assigns to each token (word, part of a word, or punctuation mark) to better match your dataset. If you’re interested in more information about fine-tuning, @the.rachel.woods has an awesome explainer on TikTok.
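For the curious, here is a toy sketch of what "shifting token probabilities" can look like. It is not how GPT is implemented: the three candidate tokens and all of the scores are made up for illustration, and a real model scores tens of thousands of tokens using learned weights. The idea it shows is that each candidate next token gets a score, the scores become probabilities, and one token is sampled at random according to those probabilities.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    biggest = max(scores.values())
    exps = {tok: math.exp(s - biggest) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample_token(probs, rng):
    """Pick one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Made-up scores for the token that follows "my" BEFORE fine-tuning
base_scores = {" favorite": 2.0, " fave": 0.5, " lil": 0.2}
# Made-up scores AFTER fine-tuning on text that says "fave" a lot
tuned_scores = {" favorite": 1.0, " fave": 2.5, " lil": 1.0}

base_probs = softmax(base_scores)
tuned_probs = softmax(tuned_scores)

rng = random.Random(0)  # fixed seed so reruns give the same pick
print("before:", base_probs)
print("after: ", tuned_probs)
print("sampled:", sample_token(tuned_probs, rng))
```

Because the pick is random, a less likely token can still be chosen now and then, which is part of why the same prompt can produce different completions.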

The process

The consistent format of the newsletter, Luce’s unique personality, and Gmail’s API made creating a dataset for this task relatively simple. I downloaded 100 SYSCA newsletters and their metadata from my inbox with Gmail’s API and parsed the HTML to extract the newsletter’s text. I created the prompts by combining the date, subject, and “In Today’s Edish” bullet points (I changed the word “edish” to “newsletter” before training the model, so it would have context for the type of content I was trying to generate). For the completion, I used the rest of the newsletter. The most tedious part of the process involved manually reformatting each completion to ensure block quotes were indented correctly, tweets, images, links, daily polls, and podcast descriptions were formatted consistently, and apostrophes and emojis were decoded correctly. Luckily the newsys were entertaining and informative enough that I didn’t get bored. After some additional processing, I uploaded the dataset to OpenAI’s API and eagerly awaited its results.
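A minimal sketch of how one prompt/completion pair could be assembled and written out as a line of JSON (JSONL is the one-example-per-line format used for fine-tuning uploads). This is an illustration, not the exact code from this project: the field labels ("Date:", "Subject:"), the `###` separator, the ` END` stop marker, and the helper names are all assumptions, and the date, subject, and body are placeholders.

```python
import json

SEPARATOR = "\n\n###\n\n"  # assumed marker meaning "the prompt ends here"
STOP = " END"              # assumed marker meaning "the completion ends here"

def make_prompt(date, subject, bullets):
    """Combine the date, subject, and preview bullets into one prompt string."""
    # "Edish" is swapped for "Newsletter" so the model has context
    lines = [f"Date: {date}", f"Subject: {subject}", "In Today's Newsletter:"]
    lines += [f"- {bullet}" for bullet in bullets]
    return "\n".join(lines) + SEPARATOR

def make_example(date, subject, bullets, body):
    """Pair a prompt with the rest of the newsletter as its completion."""
    return {
        "prompt": make_prompt(date, subject, bullets),
        "completion": " " + body.strip() + STOP,
    }

def to_jsonl(examples):
    """Serialize examples as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)

# Placeholder values, not a real newsletter
example = make_example(
    date="2023-01-01",
    subject="(placeholder subject)",
    bullets=["The Grammys happened", "Netflix fucked around and found out"],
    body="(placeholder newsletter text)\n",
)
print(to_jsonl([example]))
```

Passing `ensure_ascii=False` keeps emojis and curly apostrophes readable in the file instead of turning them into escape codes, which makes the manual clean-up pass easier to eyeball.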

I wasn’t sure how well the model would perform given that this was my first attempt at fine-tuning GPT, and I only included 100 training examples. I was also concerned that the examples I used were based on information beyond 2021 (the model’s knowledge cutoff) since I didn’t subscribe to the newsletter until 2022. I hoped this wouldn’t be too much of an issue given that my purpose was to teach it to sound like Luce, not learn new information. For my first test case, I looked at the most recent newsletter in my inbox and used its actual date, subject, and “In Today’s Edish” bullet points as a prompt.

Although Luce’s version was undeniably more fun to read (and had accurate information as opposed to making up stories like GPT), I was impressed by the results. I’ve used ChatGPT a lot over the last few months, and the style of this output was very different from what it usually produces. The model clearly learned the structure of the newsletter and how to properly insert and format links, images, and tweets. It also picked up on aspects of Luce’s writing style including her use of British spellings, questions and answers, extra commentary in parentheses, double question marks, capitalizing some words in the middle of sentences, and use of abbreviations like “fave” and “lil.” The following is a combination of unedited but cherry-picked sections from multiple completion attempts using the same prompt:

Section 1: “The Grammys happened”

Though the pop duo Dakota and the song “BABY” were hallucinations, most of the predicted Grammy winners were relevant. I was initially critical of how many images were included, but it made me laugh that the model addressed this by saying it couldn’t decide. The model hallucinated a cohost, Ben, despite the training data consistently referring to Liv (sorry Liv!), but I was impressed that it made a relevant reference to Culture Vulture even though “New episode of Culture Vulture!!” wasn’t in the prompt (some training examples referencing Culture Vulture had this bullet in the prompt, others didn’t). “Keep an ear open” was a creative play on the phrase “keep an eye out” which appeared in the training data and shows that the embedding of Culture Vulture was more closely related to listening than seeing.

Section 2: “Netflix fucked around and found out how much time you waste watching Netflix”

Given the vagueness of the prompt (Netflix fucked around and found out), I was impressed with the story it came up with - and that it made the title of the section more specific. Apparently, the idea that Netflix would attempt to restrict password sharing is too unreasonable for even GPT to predict. I liked that this section followed a bullet point format because it worked well with the content and matched some of the sections in the training data. Luce would never say “I’m so glad it’s them and not me,” but I do think she would add a short punchy line after the bullet points to wrap up the section with a laugh.

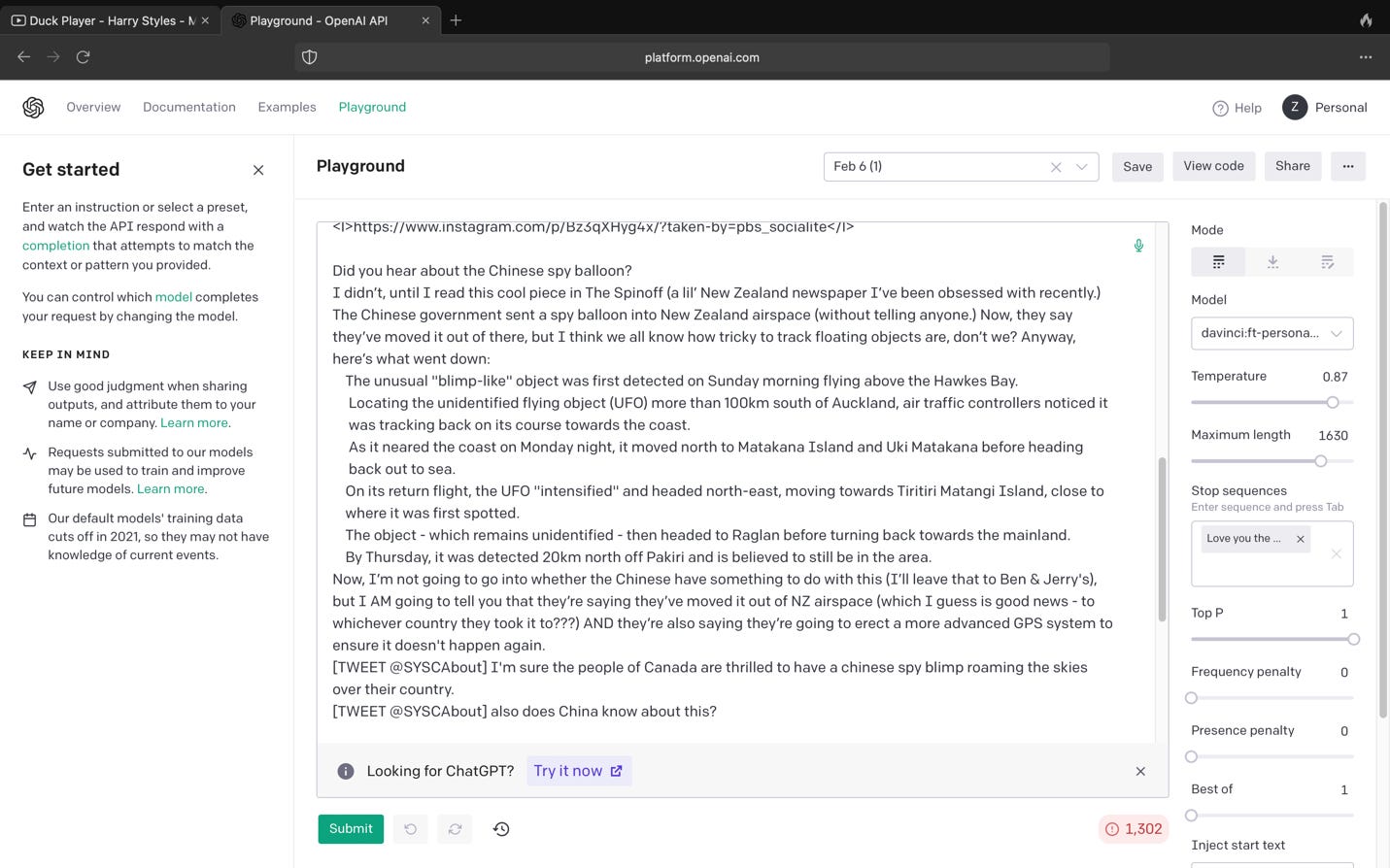

Section 3: “Did you hear about the Chinese spy balloon?”

This section was by far my favorite. I was particularly blown away by the jokes. Ben and Jerry’s did not appear in the training data, but they are known for making political statements and the joke (especially the fact that it was in parentheses) sounds like one Luce would make. I did not like the phrasing “the Chinese” in the sentence “I’m not going to go into whether the Chinese have something to do with this.” However, given that the word “Chinese” only appeared 5 times in the training data and the way GPT stochastically samples tokens, I’m not surprised that the model sampled the common word “have” after the word “Chinese.” With more training examples I think the model would have used more respectful language. The second joke should have said “bad news” instead of “good news”, but I thought the spirit of this joke also showcased Luce’s personality. I was pleasantly surprised by the proper indentation and tone shift for the block quote, though I wish it had used <l>The Spinoff</l> to link to The Spinoff. The generated tweets could have been wittier, but they exhibited proper formatting and placement and used the correct handle for SYSCA.

Section 4: “Are your stomach and gut the same thing?”

I wondered how the model would handle this mundane poll, given that some training examples had links for readers to vote on the mundane poll while others summarized the results of a previous poll. In some completions I generated, the model asked the question and provided voting links, but I chose to include this example because I liked the way it summarized “yesterday’s” results. Each training example ended with “Love you the most, Luce xxx” and in most completions, the model got this phrase correct. Even though this one didn’t, its closing sentence was similar in sentiment and included the “xxx.”

What I found out (after fucking around)

AI - if used correctly - doesn’t have to feel identity-crushing. Liv mentioned on Culture Vulture that she’d read about jobs being automated by machines before, but DALL-E 2 felt so identity-crushing because it hit her personally as an artist. I see how feeling replaceable by a large, generalized AI system would feel that way, but I don’t think it applies when a model needs to be fine-tuned on your own portfolio before being able to replicate your work. The potential for a fine-tuned AI model to learn from your work reinforces the strength and uniqueness of your identity while giving you the tools to increase your impact.

I was initially worried that Luce would be offended by my belief that AI could succeed at writing the newsy, and I was so glad that she was as excited about this project as I was! The strongest predictor of success when fine-tuning GPT is the quality of the dataset, and I mean it as the highest compliment when I say AI can learn from SYSCA’s work.

Zoe xxx

loved working on this, tysm for publishing it 🤍 🤍 🤍 🤍

I generally find all this techy stuff hard to read about because 1) it bores me a bit and 2) I often feel too stupid to understand what I am reading, but Zoe you really made it easy to read this one, thank you so much this is so interesting!!